Enterprise LLM as a Service

Managed Access to Generative AI for your Staff

Everyone agrees that using AI day to day gives your staff superpowers, but at what cost and risk? Enterprise LLM as a Service helps you manage both cost and risk from an easy-to-use control panel.

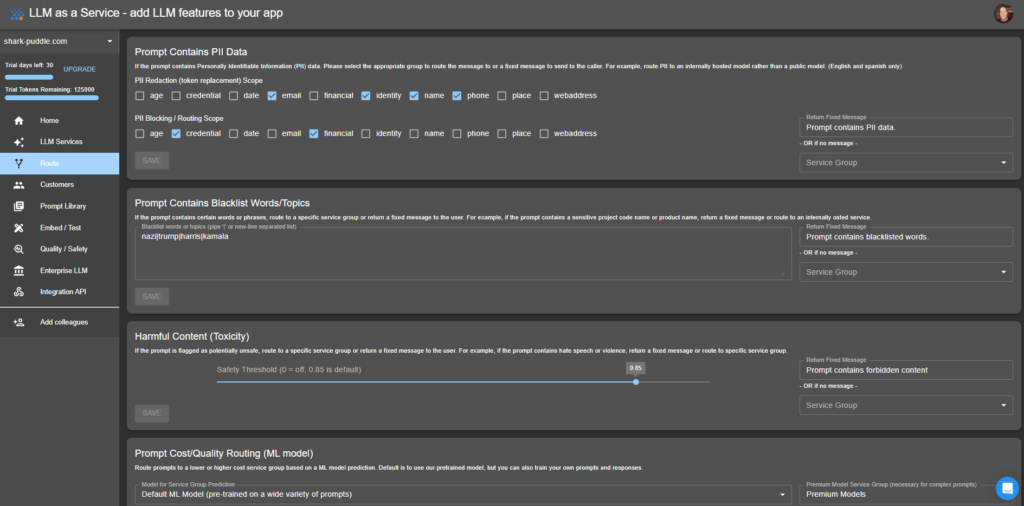

Restrict Confidential and Sensitive Information

Personally identifiable information (PII) can be blocked or redacted before it is sent to any LLM vendor. Detection is based on state-of-the-art machine learning models, and you remain in control of what data is redacted, blocked or replaced.
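To illustrate the redact/block/allow idea, here is a minimal sketch of a per-entity policy applied to a prompt before it leaves your network. It is an assumption-laden stand-in, not the product's implementation: the service uses machine learning models for detection, whereas this sketch swaps in two simple regular expressions, and the names `Action`, `DETECTORS`, `BlockedPrompt` and `apply_pii_policy` are hypothetical.

```python
import re
from enum import Enum


class Action(Enum):
    REDACT = "redact"  # replace each match with a placeholder tag
    BLOCK = "block"    # refuse to forward the whole prompt
    ALLOW = "allow"    # leave matches untouched


# Hypothetical detectors: simple regexes standing in for ML entity recognition.
DETECTORS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d -]{7,}\d"),
}


class BlockedPrompt(Exception):
    """Raised when a prompt contains an entity whose policy is BLOCK."""


def apply_pii_policy(prompt: str, policy: dict[str, Action]) -> str:
    """Return the prompt with PII redacted, or raise BlockedPrompt."""
    for label, pattern in DETECTORS.items():
        action = policy.get(label, Action.ALLOW)  # unlisted entities pass through
        if action is Action.BLOCK:
            if pattern.search(prompt):
                raise BlockedPrompt(f"prompt contains {label}")
        elif action is Action.REDACT:
            prompt = pattern.sub(f"[{label}]", prompt)
    return prompt
```

For example, with `{"EMAIL": Action.REDACT}` the prompt `"Contact jane.doe@example.com about the invoice"` becomes `"Contact [EMAIL] about the invoice"`, while a policy of `{"PHONE": Action.BLOCK}` rejects any prompt containing a phone number outright.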

Manage Brand and Policy

Brand and policy guidelines are automatically injected as system instructions into EVERY request, so all generated material stays consistent with your enterprise voice and safe.
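A minimal sketch of what injecting guidelines into every request can look like, assuming a chat-style request made of `{"role": ..., "content": ...}` messages. The guideline text and the `inject_policy` helper are hypothetical; they show the general technique, not the product's internals.

```python
# Hypothetical brand and policy guidelines, configured once by an administrator.
BRAND_POLICY = (
    "You write on behalf of Example Corp. Use a friendly, professional tone. "
    "Never give legal or medical advice."
)


def inject_policy(
    messages: list[dict[str, str]], policy: str = BRAND_POLICY
) -> list[dict[str, str]]:
    """Prepend the enterprise policy as a system message.

    Messages the user supplied (including their own system messages) are kept
    in order after the policy, so the enterprise guidelines always come first.
    The caller's list is not modified.
    """
    return [{"role": "system", "content": policy}] + list(messages)
```

Because the gateway applies this to every outgoing request, individual staff members do not need to remember to paste the guidelines into their prompts.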

Full Call Log and Auditing

Validate the safe use of LLMs within your enterprise with a full call log, including calls that failed the safety checks. See how LLMs are being used and fine-tune which models handle which prompts to maximize quality while minimizing cost.
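As a rough illustration of the kind of questions a call log answers, here is a sketch of a log record and two queries: which calls failed the safety checks, and how many tokens each model consumed. The `CallRecord` fields and helper names are assumptions for illustration, not the product's actual log schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    """One logged LLM call (hypothetical schema)."""
    user: str
    model: str
    prompt_tokens: int
    completion_tokens: int  # 0 when the call was blocked before reaching a model
    passed_safety: bool
    reason: str = ""        # why the call was blocked, if it was


def failed_calls(log: list[CallRecord]) -> list[CallRecord]:
    """Calls rejected by the safety checks, kept for auditing."""
    return [r for r in log if not r.passed_safety]


def tokens_by_model(log: list[CallRecord]) -> dict[str, int]:
    """Total tokens per model, useful for tuning which model handles which prompts."""
    totals: Counter[str] = Counter()
    for r in log:
        totals[r.model] += r.prompt_tokens + r.completion_tokens
    return dict(totals)
```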

Smart Routing (optimize speed and cost)

Reduce costs with our smart model routing. We analyze each incoming prompt and route it to a service group. For example, simple text summarization goes to default models, whilst complex code generation or reasoning prompts are routed to the highest-performance models. Under typical use, this extends your LLM budget by 60-80%.
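The routing idea can be sketched in a few lines. This is a deliberately crude stand-in: the keyword list, the group names and the model names are all hypothetical, and the product's own prompt analysis is not described here, so treat this only as an illustration of "classify, then pick a service group".

```python
import re

# Hypothetical service groups and the models assigned to them.
SERVICE_GROUPS = {
    "default": "small-fast-model",
    "high_performance": "large-reasoning-model",
}

# Crude signals that a prompt needs a stronger model (an assumption,
# not the product's real classifier).
COMPLEX_HINTS = re.compile(
    r"\b(code|function|debug|refactor|algorithm|prove|reason|step by step)\b",
    re.IGNORECASE,
)


def route(prompt: str) -> str:
    """Return the service group that should handle this prompt."""
    if COMPLEX_HINTS.search(prompt):
        return "high_performance"
    return "default"


def model_for(prompt: str) -> str:
    """Resolve a prompt to the concrete model in its service group."""
    return SERVICE_GROUPS[route(prompt)]
```

A summarization request such as `"Summarize this meeting transcript"` lands in the cheaper `default` group, while `"Write a Python function to parse CSV files"` goes to `high_performance`. In practice a trained classifier would replace the keyword list, but the cost saving comes from the same design: only the prompts that need an expensive model get one.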

Token Quotas and Reporting

Allocate tokens per user or per department to control costs. This avoids costly surprises at the end of each month and keeps LLM usage within the budget you specify (and because we route to lower-cost models where appropriate, your budget goes 60-80% further).
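A minimal sketch of per-user and per-department token quotas, assuming a request is refused if it would push either the user or their department over its monthly limit. `QuotaLedger`, `QuotaExceeded` and the field names are hypothetical; a user or department with no configured limit is treated here as having no allowance.

```python
from dataclasses import dataclass, field


class QuotaExceeded(Exception):
    """Raised when a request would exceed a user or department quota."""


@dataclass
class QuotaLedger:
    """Track token usage for one billing period against configured limits."""
    user_limits: dict[str, int]
    dept_limits: dict[str, int]
    used_by_user: dict[str, int] = field(default_factory=dict)
    used_by_dept: dict[str, int] = field(default_factory=dict)

    def charge(self, user: str, dept: str, tokens: int) -> None:
        """Record usage, refusing requests that would exceed either quota."""
        new_user = self.used_by_user.get(user, 0) + tokens
        new_dept = self.used_by_dept.get(dept, 0) + tokens
        if new_user > self.user_limits.get(user, 0):
            raise QuotaExceeded(f"user {user} would exceed quota")
        if new_dept > self.dept_limits.get(dept, 0):
            raise QuotaExceeded(f"department {dept} would exceed quota")
        # Only commit the usage once both checks have passed.
        self.used_by_user[user] = new_user
        self.used_by_dept[dept] = new_dept

    def reset(self) -> None:
        """Start a new billing period."""
        self.used_by_user.clear()
        self.used_by_dept.clear()
```

Checking both limits before committing either counter means a refused request leaves the ledger unchanged, so reported usage always matches what was actually sent.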

Eliminate Toxic and Unsafe Prompts

Toxic or unsafe prompts are blocked. You are alerted, and your staff are protected from insulting language, hate speech, harassment or abuse, profanity, violence or threats, and sexually explicit or graphic prompts. This avoids unwanted surprises in LLM responses, and it works in addition to the policies and other guardrails that vendors already employ.

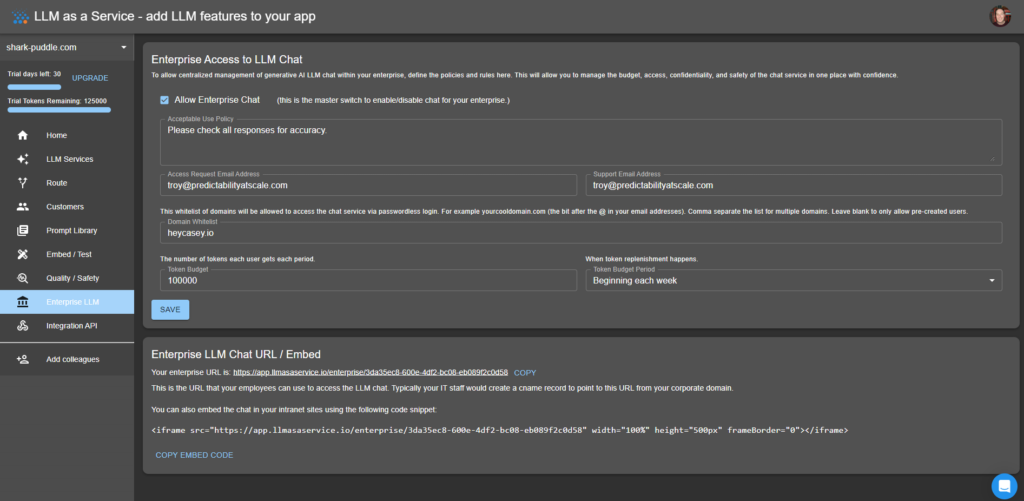

All of the Enterprise LLM Chat features are controlled in one place.

PII, confidential information and harmful content in prompts can be blocked or redacted.

Track costs and savings in the control panel. See calls and monitor availability.