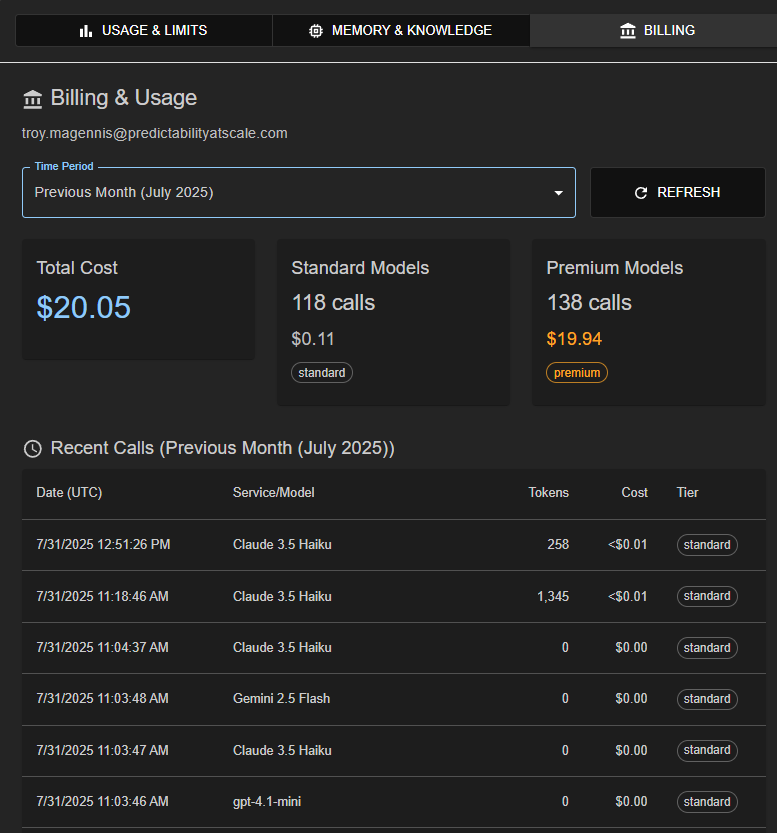

For each customer you can view full usage and billing details. This lets you view and share key details and bill customers or internal departments based on their usage. One use case we use it for in another of …

Author Archives: Troy Magennis

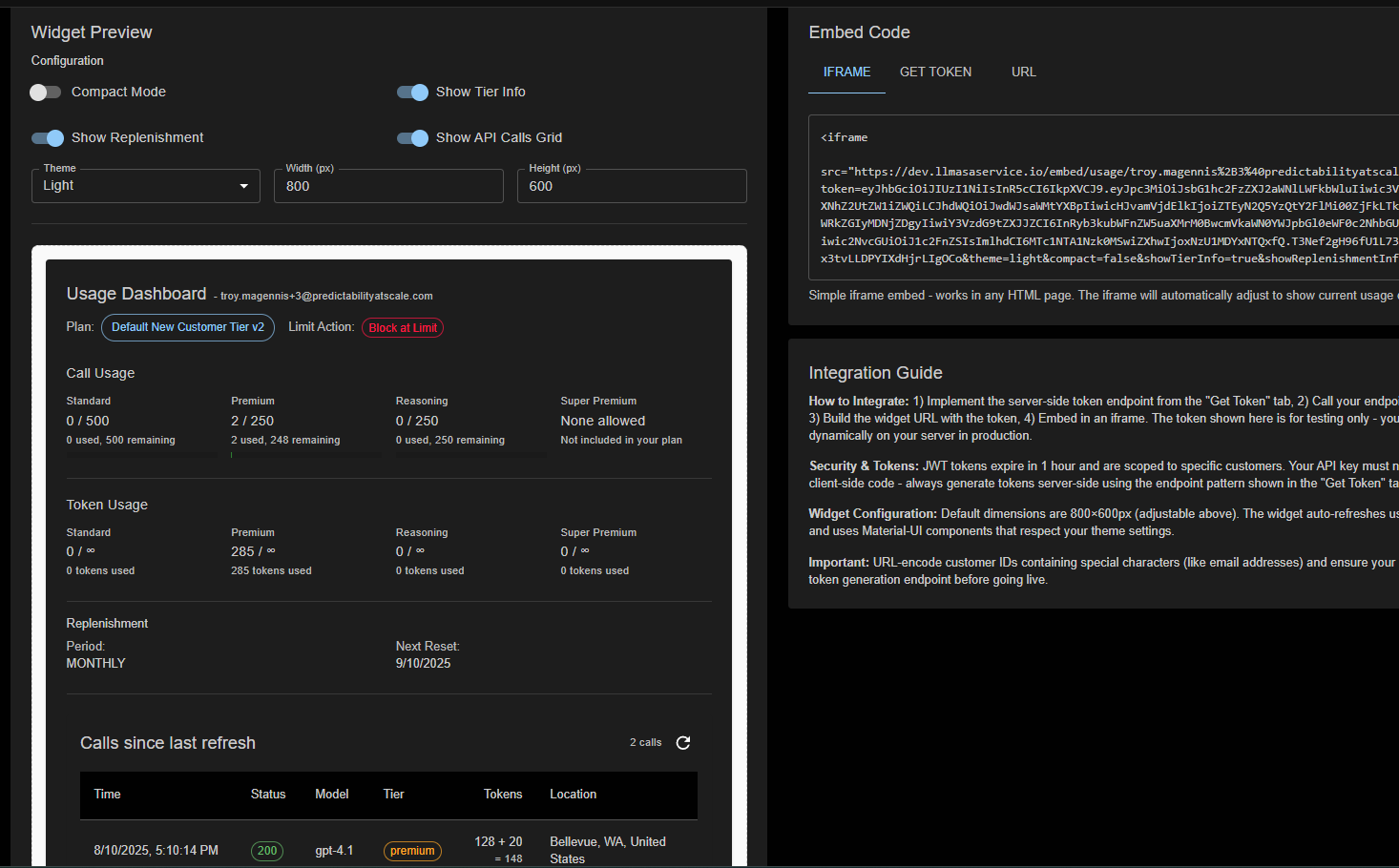

2025-08-13 Customer Usage Widget

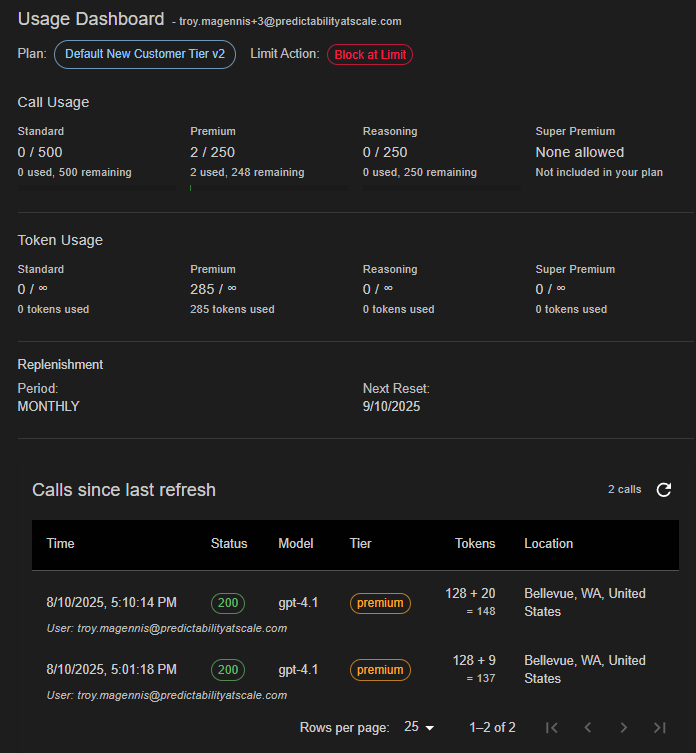

Adding the Usage Tiers feature also meant letting you expose call and usage data to your customers. To make that possible, you can create widgets and embed them in your applications or websites. When you select …

2025-08-12 Usage Tiers

Having trouble setting limits or tracking your customers' usage? We’ve got you. Introducing Usage Tiers. This feature lets you configure different usage tiers that can be applied to customers. You set up and access these …

2025-08-01 New Model Management

When we started to build LLMAsAService.io there were just a few vendors and just a few models. That time is gone. Models are constantly changing, their prices are (generally) dropping, and there is always a new model to introduce. It was hard for …

2025-04-04 Product Update

FEATURE UPDATES 1. Conversations: You will now be able to see the conversations that your customers have had with your agents. We default conversations to ON, but if you don’t want to capture these, turn it off in your agent …

2025-04-27 Product Update Email

YOUR ACTION ITEMS 1. Model updates: It’s been a busy month of new vendor model releases, mostly led by OpenAI. We’ve looked at the pricing, and here is what we did and what we recommend YOU do: a) If you have a service …

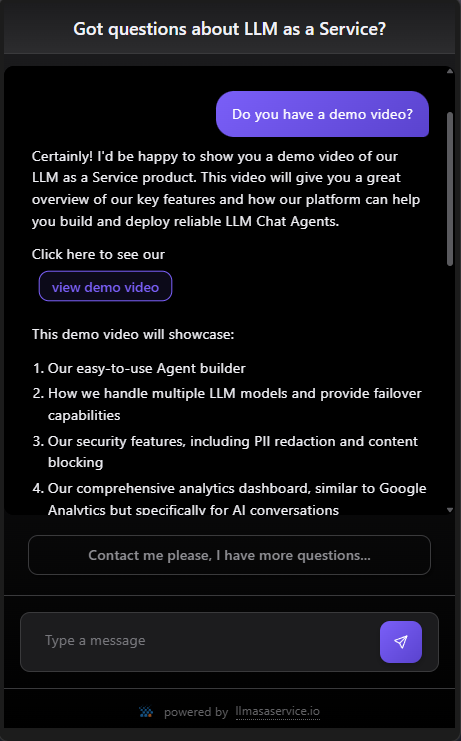

Value Proposition

Develop: Quickly build powerful, embeddable Chat Agents using our intuitive control panel. Seamlessly integrate them into your application or website, whether you prefer a no-code solution via our iFrame or NPM package, a flexible some-code approach with our client component library, or …

Hosting and Routing to Private Models (like DeepSeek or Llama)

Want to run DeepSeek locally? It takes about 5 minutes and is zero cost. I made this video tutorial on running it locally and integrating it into our LLM as a Service product, enabling routing to different LLM vendors based …

Protecting Brand, Tone and Privacy in LLMs

LLMAsAService.io adds system instructions to ALL prompts without developers having to remember to include them. This helps maintain a consistent brand and tone, and improves compliance with a company’s legal policies. This isn’t just about being politically correct. It’s about keeping applications …